I am trying to find an elegant way to calculate a bivariate normal CDF with Python where one upper bound of the CDF is a function of two variables, one of which is a variable of the bivariate normal density (an integration variable).

```python
import numpy as np
from scipy import integrate

# First define f(x, y) as the bivariate normal distribution with fixed correlation p

# Define N2(a, b) as the cumulative bivariate normal distribution f where a is constant
def N2(a, b):
    prob, error = integrate.dblquad(f, -np.inf, a, lambda x: -np.inf, b)
    return prob

# Upper bound function of 2 variables example where x is an integral variable
def upper_bound(x, v):
    return 0.5 * v * x

# Calculate bivariate normal CDF for different values of v in the upper bound
results = ...
```

Any ideas on how I could change my approach so that the call to upper_bound(x, v) in the `results = ...` line would work? Other approaches to tackle the problem are also welcome.

Edit: This is the integral I want to compute, where f(x, y) is the bivariate normal density function. Note that the actual upper bound I want to compute is far more complicated than upper_bound(x, v) = 0.5*v*x, which is just an example, so I do not want to compute it symbolically, for instance with SymPy. Also, my goal is to compute the integral for a few hundred different values of v.

Although it's slow, this approach seems to work.

The first few lines, up to 'this should produce 1', are a sanity check: I wanted to verify that my approach would correctly calculate the volume under the density. I use a variance-covariance matrix to get the desired correlation of 0.4 and avoid writing my own pdf. I curry functions in two places so that each function has only a single parameter. This makes it possible to calculate the inner integral as a function of x, and it also makes it possible to take the v parameter 'outside' the other calculations.

```python
import numpy as np
from scipy.integrate import quad
from scipy.stats import multivariate_normal
from toolz import curry

# now to what the OP wants
@curry
def inner_integral(v, x):
    ...

inner_integral_for_one_v = inner_integral(0.8)
print(quad(inner_integral_for_one_v, -np.inf, 1))
```

Statistical hypothesis tests based on cumulative distribution functions can assess whether there is evidence against a sample of data having arisen from a given distribution, or evidence against two samples of data having arisen from the same (unknown) population distribution. The Kolmogorov–Smirnov test is based on cumulative distribution functions and can be used to test whether two empirical distributions are different or whether an empirical distribution is different from an ideal distribution. The closely related Kuiper's test is useful if the domain of the distribution is cyclic, as in day of the week. For instance, Kuiper's test might be used to see whether the number of tornadoes varies during the year or whether sales of a product vary by day of the week or day of the month.
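A minimal sketch of the curried inner-integral idea, assuming the question's example bound g(x, v) = 0.5*v*x, a constant outer limit a = 1 (taken from the answer's quad call), and a standard bivariate normal with zero means, unit variances and correlation 0.4. The target is then $\int_{-\infty}^{a}\int_{-\infty}^{g(x,v)} f(x,y)\,dy\,dx$. `nested_cdf` fixes v with `functools.partial` (used here instead of `toolz.curry` to avoid the extra dependency; `curry` would behave the same way) so that quad sees a function of x alone. `conditional_cdf` is a swapped-in alternative, not taken from the answer: since Y given X = x is normal with mean ρx and variance 1 − ρ², the inner integral equals φ(x)Φ((g(x, v) − ρx)/√(1 − ρ²)), which leaves one quad per value of v and works for any bound that depends only on x and v. All names (`RHO`, `BVN`, `nested_cdf`, `conditional_cdf`) are hypothetical.

```python
from functools import partial

import numpy as np
from scipy.integrate import quad
from scipy.special import ndtr  # standard normal CDF, Phi
from scipy.stats import multivariate_normal

RHO = 0.4  # assumed correlation, as in the answer
# Assumed standard bivariate normal: zero means, unit variances, correlation RHO
BVN = multivariate_normal(mean=[0.0, 0.0], cov=[[1.0, RHO], [RHO, 1.0]])


def upper_bound(x, v):
    # Example bound from the question; the real bound is more complicated
    return 0.5 * v * x


def inner_integral(v, x):
    # For fixed x: integrate the density over y in (-inf, upper_bound(x, v)]
    value, _ = quad(lambda y: BVN.pdf([x, y]), -np.inf, upper_bound(x, v))
    return value


def nested_cdf(v, a=1.0):
    # partial(inner_integral, v) is a one-argument function of x for quad
    value, _ = quad(partial(inner_integral, v), -np.inf, a)
    return value


def conditional_cdf(v, a=1.0, rho=RHO):
    # Y | X = x ~ N(rho * x, 1 - rho**2), so the inner integral is
    # phi(x) * Phi((upper_bound(x, v) - rho * x) / sqrt(1 - rho**2))
    s = np.sqrt(1.0 - rho**2)

    def integrand(x):
        phi_x = np.exp(-0.5 * x * x) / np.sqrt(2.0 * np.pi)
        return phi_x * ndtr((upper_bound(x, v) - rho * x) / s)

    value, _ = quad(integrand, -np.inf, a)
    return value


print(nested_cdf(0.8), conditional_cdf(0.8))

# A few hundred values of v, as the question requires
vs = np.linspace(0.01, 4.0, 300)
results = [conditional_cdf(v) for v in vs]
```

The nested version evaluates the bivariate pdf many thousands of times per value of v, which is probably why it feels slow; the conditional form only calls cheap special functions, so looping over a few hundred values of v stays fast.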
The empirical distribution function is a formal direct estimate of the cumulative distribution function for which simple statistical properties can be derived and which can form the basis of various statistical hypothesis tests.
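A minimal NumPy sketch of the empirical distribution function and the one-sample Kolmogorov–Smirnov and Kuiper statistics built on it, assuming a fully specified continuous reference CDF (here the standard normal, via `scipy.special.ndtr`). It computes the test statistics only, not p-values, and the function names are hypothetical.

```python
import numpy as np
from scipy.special import ndtr  # standard normal CDF


def ecdf(sample):
    # Sorted observations and the EDF value i/n at the i-th one
    x = np.sort(np.asarray(sample, dtype=float))
    return x, np.arange(1, x.size + 1) / x.size


def ks_and_kuiper(sample, cdf):
    # Compare the EDF of `sample` with a hypothesised continuous CDF
    x, f_n = ecdf(sample)
    n = x.size
    f = cdf(x)
    d_plus = np.max(f_n - f)                # largest gap with the EDF above the CDF
    d_minus = np.max(f - (f_n - 1.0 / n))   # largest gap with the EDF below the CDF
    ks = max(d_plus, d_minus)               # Kolmogorov-Smirnov statistic D
    kuiper = d_plus + d_minus               # Kuiper statistic V
    return ks, kuiper


rng = np.random.default_rng(0)
sample = rng.standard_normal(200)
ks, kuiper = ks_and_kuiper(sample, ndtr)
print(f"D = {ks:.4f}, V = {kuiper:.4f}")
```

Because Kuiper's V adds the two one-sided deviations instead of taking their maximum, it does not depend on where a cyclic domain is "cut open", which is why it suits data such as day of the week.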

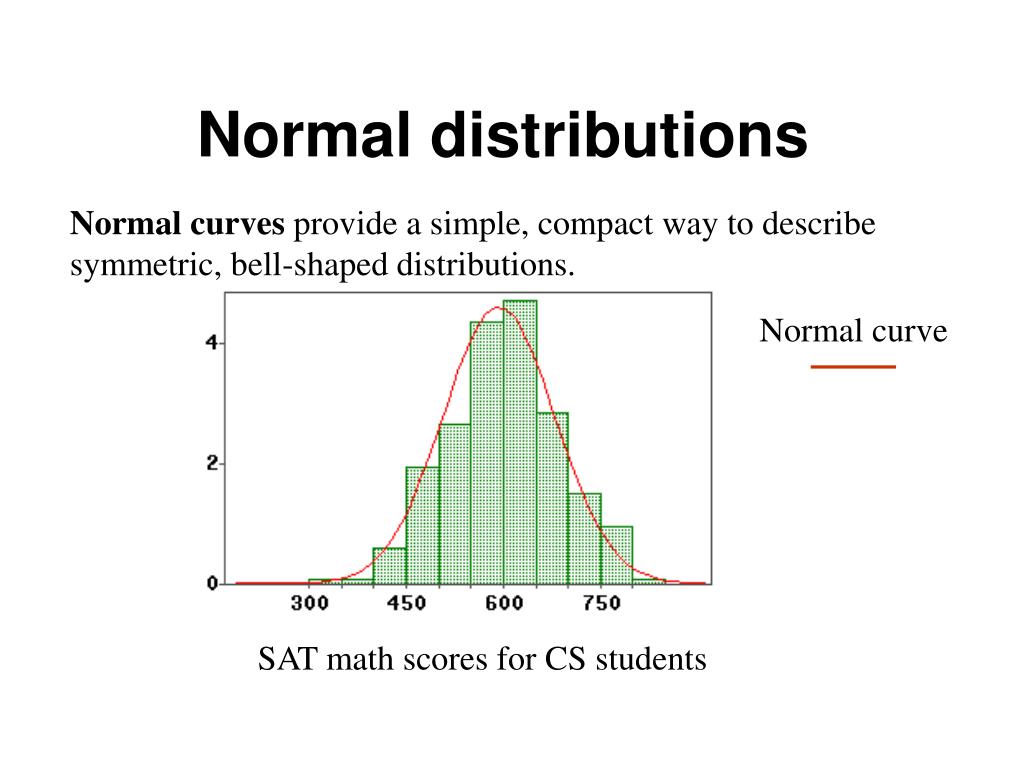

Cumulative frequency analysis is the analysis of the frequency of occurrence of values of a phenomenon less than a reference value. The concept of the cumulative distribution function makes an explicit appearance in statistical analysis in two (similar) ways. In probability theory and statistics, the cumulative distribution function ( CDF) of a real-valued random variable X. Cumulative distribution function for the normal distribution
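A small illustration of the definition F(x) = P(X ≤ x), assuming a normal random variable with mean mu and standard deviation sigma, for which F(x) = ½[1 + erf((x − mu)/(sigma√2))]. The `cumulative_frequency` helper is a hypothetical example that counts observations at or below a reference value (matching the CDF's ≤ convention); dividing that count by the sample size gives the empirical distribution function at that point.

```python
import math


def normal_cdf(x, mu=0.0, sigma=1.0):
    # F(x) = P(X <= x) for X ~ N(mu, sigma**2), written with the error function
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def cumulative_frequency(values, reference):
    # Number of observations less than or equal to the reference value
    return sum(1 for value in values if value <= reference)


data = [2.1, 3.4, 1.8, 2.9, 3.4, 4.0]
count = cumulative_frequency(data, 3.0)  # 2.1, 1.8 and 2.9 qualify
relative = count / len(data)             # empirical CDF at 3.0
print(normal_cdf(0.0), count, relative)
```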
